Thinking of AI as nuclear-powered chimeric puppies might help shake the idea that AI agents are omniscient. At the very least, it may help suppress the anthropomorphic bias one may have when thinking about AI, and remind oneself that it takes a lot of power to feed these dogs and a lot of time to train them.

Nuclear-Powered Chimeric AI Puppies

Agentic AI behaves, in effect, like very well-trained puppies that can perform many elaborate tricks, but they are still (almost) just puppies.

Like puppies, they can be fooled and they make mistakes. They can be trained, but they will do bad things and need to be disciplined. They are eager to please, though not because of any “wiring” for pack cohesion, but because they are “wired” to respond to prompts. They can mimic and emulate behaviors that people see as sociable, because they have been trained to do so.

Currently, they cannot reach the “higher” levels of mental activity that humans can attain, and their “hard-wiring” is very different from that of humans and other animals (let’s be thankful for that; otherwise the term “AI revolution” would take on a very different meaning from the one it has had over the past decade, and we’d be in SkyNet territory).

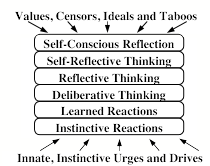

Marvin Minsky’s Levels of Mental Activity

In “The Emotion Machine” by Marvin Minsky (the late AI researcher, for those of you not in the know), Chapter 5 deals with levels of mental activity, which he builds up throughout the chapter until he arrives at this diagram (reproduced above):

Starting at the bottom, we first encounter:

Innate, Instinctive Urges and Drives

A real puppy is a distinct biological entity, with the limited scope of a single organism. Everything about it is “hard-wired” into its biochemistry. I don’t subscribe to the idea of instincts, so I’d prefer to just say “innate signals and distresses”, but I’m quoting Minsky, so it is what it is. Think hunger, thirst, sleepiness, pain, and a whole host of other internal signals.

AI agents are software running on hardware. Anything approaching the internal signalling of puppies might map to hardware and software monitoring of system health and power levels, but if the agent doesn’t receive those signals (or receive them in a prioritized manner), the signals are not part of its “mental” activity and do not contribute to its output. The closest “real” analogy would be the absolute need to respond to a prompt.

Instinctive Reactions

These types of reactions are hard-wired emotive responses, limited by the reactor’s ability to effect some change in the environment, typically in response to one of the innate urges mentioned above. Puppies bark (or yip) from a very young age. They whine when they are distressed. They growl when they feel threatened. They wag their tails when they are happy.

AI agents don’t have instinctive reactions, although they are “programmed” to respond to prompts. Prompts are the AI analogue of urges: they are what drive AI behavior, and an AI agent doesn’t ignore them. AI doesn’t have deep, gut-level or emotive reactions.

Learned Reactions

Puppies can learn to respond to commands and can learn behaviors through positive and negative reinforcement. They can learn tricks, and can learn to associate certain actions with rewards or punishments. The extent of their learning is limited, because their hard-wired signals and their capacity to perform are constrained by their form, their biology and, ultimately, their genetics (which, through embryology and development, constrain their physical capabilities).

“Learned” reactions are where (ostensibly) most AI operates today, since AI is “driven” by prompts and training. Unlike “rote” learned responses, AI can parse and normalize prompts to select statistically likely responses. When the teleological (i.e., human-driven, purposeful) links between prompts and expected responses fall outside the training data, AI can fail spectacularly.
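To make “select statistically likely responses” a little more concrete, here is a minimal sketch under toy assumptions: three hypothetical candidate responses with made-up scores, and illustrative helper names (softmax, pick_response) that are not any real model’s API. It turns the scores into probabilities and returns the most probable candidate. Notice that nothing in it asks whether the winner is correct, only whether it is likely, which is why a prompt outside the training data still gets a confident-looking answer.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {cand: math.exp(s - top) for cand, s in scores.items()}
    total = sum(exps.values())
    return {cand: e / total for cand, e in exps.items()}


def pick_response(scores: Dict[str, float]) -> str:
    """Greedy selection: return the most probable candidate, right or wrong."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


# Hypothetical scores for three candidate responses to the prompt "Sit!".
scores = {"sit": 2.0, "stay": 1.0, "roll over": 0.5}
print(pick_response(scores))  # sit
```

Real models repeat a choice like this token by token over a very large vocabulary, and often sample instead of always taking the maximum, but the selection is still driven by likelihood rather than by any grasp of the handler’s purpose.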

Deliberative Thinking

Deliberative thinking is choosing among alternatives for which one can build unrealized but plausible mental models. It requires a level of abstraction and foresight. There is no guarantee that the models are objectively accurate, but at least they are plausible to the deliberator.

Puppies don’t really deliberate. Not in any meaningful sense.

AI doesn’t really deliberate either. I’d like to think that using an AI to monitor another AI’s processing and outputs might be a form of deliberation, but unless that “monitor” is able to influence the original AI’s processing in real time, it is more like a censor than a deliberator.
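The difference between a censor and a monitor that influences processing can be sketched in a few lines. This is a toy under stated assumptions: the “model” is just a hypothetical list of ranked candidate words per step, the monitor is a plain yes/no predicate, and the names censor, steer and no_chewing are illustrative, not part of any real framework. The censor only sees the finished output and can pass or block it; steer checks each step as it is produced and pushes generation to the next-best candidate, which is the kind of real-time influence described above.

```python
from typing import Callable, List, Optional

# Hypothetical toy "model": for each step, candidate words ranked most likely first.
Steps = List[List[str]]
# A monitor judges a (possibly partial) output and returns True if acceptable.
Monitor = Callable[[List[str]], bool]


def censor(steps: Steps, ok: Monitor) -> Optional[List[str]]:
    """Post-hoc: generate greedily, then pass or block the whole output."""
    output = [candidates[0] for candidates in steps]
    return output if ok(output) else None


def steer(steps: Steps, ok: Monitor) -> Optional[List[str]]:
    """In-loop: the monitor vets every step and can redirect generation."""
    output: List[str] = []
    for candidates in steps:
        for word in candidates:
            if ok(output + [word]):
                output.append(word)
                break
        else:
            return None  # no acceptable candidate at this step
    return output


def no_chewing(words: List[str]) -> bool:
    """Example monitor: reject any output that mentions chewing."""
    return "chew" not in words


steps = [["puppy", "dog"], ["will", "should"], ["chew", "fetch"], ["slippers", "ball"]]
print(censor(steps, no_chewing))  # None
print(steer(steps, no_chewing))   # ['puppy', 'will', 'fetch', 'slippers']
```

Even the steering version is not deliberation in Minsky’s sense: it never builds models of alternative outcomes, it only vetoes a step and falls back to the next-ranked option.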

Reflective Thinking

Reflective thinking is about placing oneself in one’s models, both in terms of analysis of preconditions and as an object affected by the results of one’s actions. It is a necessary precursor to self-awareness and self-consciousness.

We’re way past puppies here. Puppies don’t reflect on their actions or thoughts. I’ve seen people fail to train puppies properly because they assume the puppy can feel shame or pride, which it just can’t.

AI doesn’t reflect on its actions or thoughts either. Humans who drive the prompts can reflect on the AI’s outputs and keep driving the AI (hoping it will learn), but the AI itself is not capable of reflection. It doesn’t care how it is perceived by humans or other agents, because it doesn’t perceive itself at all. AI can simulate reflection, but that is really a learned mimicry of human reflective output.

It should be noted that most AI models are not models of action and thought, but models of request and response.

Self-Reflective Thinking

Alright, apart from cartoons and science fiction (a.k.a. science fantasy), puppies don’t do this.

Neither does AI.

In some senses, this isn’t done regularly or systematically by humans either.

There is no hard rule that to be human you have to regularly self-reflect, and there is no guidance on how one should go about it.

Self-Conscious Reflection

We’re in the stratosphere now. We’ve left puppies and AI far behind.

This level of mentality is about being aware of oneself as an entity that performs all the mental actions described above, and being able to analyze and attempt to alter the program of those actions (hopefully to improve one’s capabilities).

Like with any reflective thinking, there is no guarantee of objective accuracy or success.

Prompt Engineers: Dog Trainers and Handlers?

Alright, bringing it back to the central thesis. If AI are puppies, then model trainers are dog trainers and prompt engineers are handlers. They can teach the AI to do tricks, and can discipline it when it misbehaves. But ultimately, AI still behaves like superior puppies.

These are very powerful puppies that can do lots of elaborate tricks. Being chimeric, they can take on many forms and simulate many different types of behavior. With the right training and agentic access, they can affect many different systems and environments.

AI Equivalent of Puppy Mills

Since just about anyone can set up some AI enterprise, and the cost of entry is relatively low, there is a risk of market saturation with low-quality AI systems, akin to puppy mills. No real animals are harmed in this analogy, but low-quality AI systems can lead to poor user experiences, misinformation, and potential harm if the AI is used in critical applications.

That is not my dog…

Also, if AI puppies are trained by one organization and handled (prompted) by another, there is the question of who is ultimately responsible for the AI’s actions and outputs. In most jurisdictions in the United States, the owner of a dog is responsible for its actions.

For agentic AI, the prompt engineer (handler) probably has the most direct “final” influence over the AI’s behavior, acting as the monitor and director of the AI’s actions. I am sure that contracts for AI usage will increasingly include clauses that assign responsibility and due-diligence assurances to trainers, and liability assumptions to handlers.

Looming Debt-Based Costs

With AI investment being “hot”, investors face the risk of market saturation, as well as rapid technological obsolescence that requires ever more investment to stay competitive. Beyond hardware and software, this includes the costs of model training and power infrastructure.

The capital and operational expenditure required to maintain and improve AI systems covers processing hardware (e.g., GPUs) with compatible memory (commoditized), network access, power infrastructure, and training time for models (specialized).

GPUs and memory depreciate quickly, and training chases the market’s demand for answers, a demand that has to be backed by a willingness to pay. When multiple suppliers rush to meet that demand, margins get squeezed, and investors eventually look for returns both in the AI space and in the enterprises that use AI services.

More Power!

AI takes power. So much so that to feed it, we need more power plants.

Hence nuclear, and that isn’t just me saying it; at this point, it’s Microsoft too.

Conclusion

Time will tell whether most of this is howling at the moon, but the sooner one stops anthropomorphizing AI, the easier it may be to deal with.